“How do you eat an elephant? One bite at a time.”

AGF Funding

Well, it’s finally happened. Since committing myself way back in May 2024 to founding an organisation focussed on implementing high quality algorithmic solutions to important problems, I’ve been slowly chipping away at scaling this idea. From acquiring a large number of projects and student volunteers to registering as a CIC last month, it has come a long way. I got one step further this month, after successfully receiving a grant that will cover my (modest) salary and some organisational operations (compute budget, website hosting, etc.). This is huge news, and what’s more, we received a larger amount than I originally applied for.

The pressure is now on to deliver on our funding promises and successfully scale while funding is available. In concrete terms, this means acquiring a co-director, recruiting reliable team leads to run the projects, linking up with some high-profile board members, and acquiring an office space for us all to hang out in. I’m planning to get most of this done by January, when I will start using the grant.

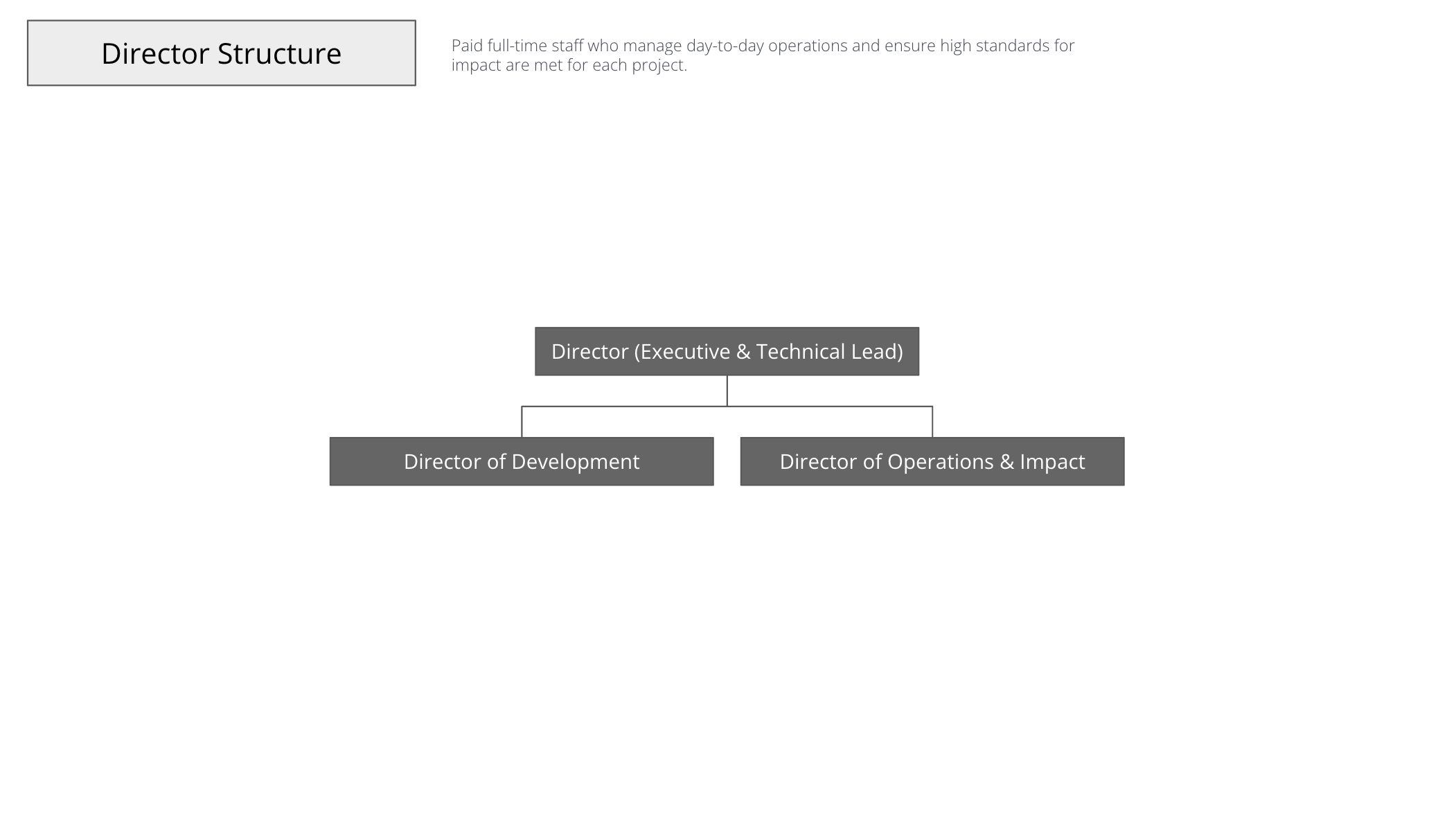

Most key is getting a reliable co-director. The positions I am looking for are as follows:

Director of Development

- Manages fundraising, donor relations, and grant applications.

- Builds partnerships with governments, NGOs, and tech firms.

- Leads marketing, PR, and outreach strategies.

- Ensures projects are implemented effectively in partner organisations.

Director of Operations & Impact

- Oversees volunteer acquisition and community engagement.

- Tracks and reports on impact metrics for funding and transparency.

- Handles administrative tasks, HR, and legal/compliance.

Hiring a good co-director is key and they need to be highly aligned with the AGF’s goals.

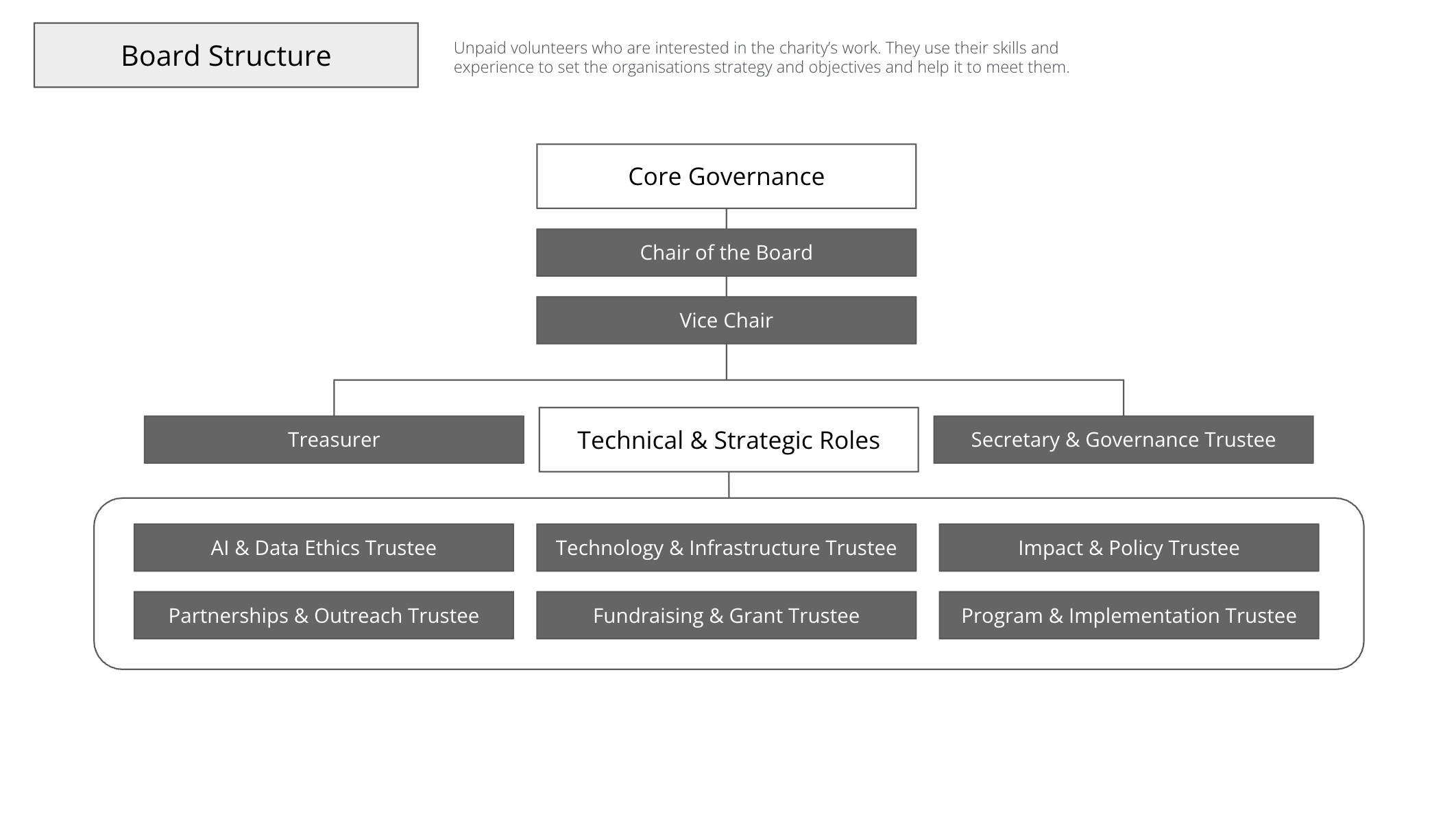

Board members help guide the direction of the organisation, as well as provide advice and connections.

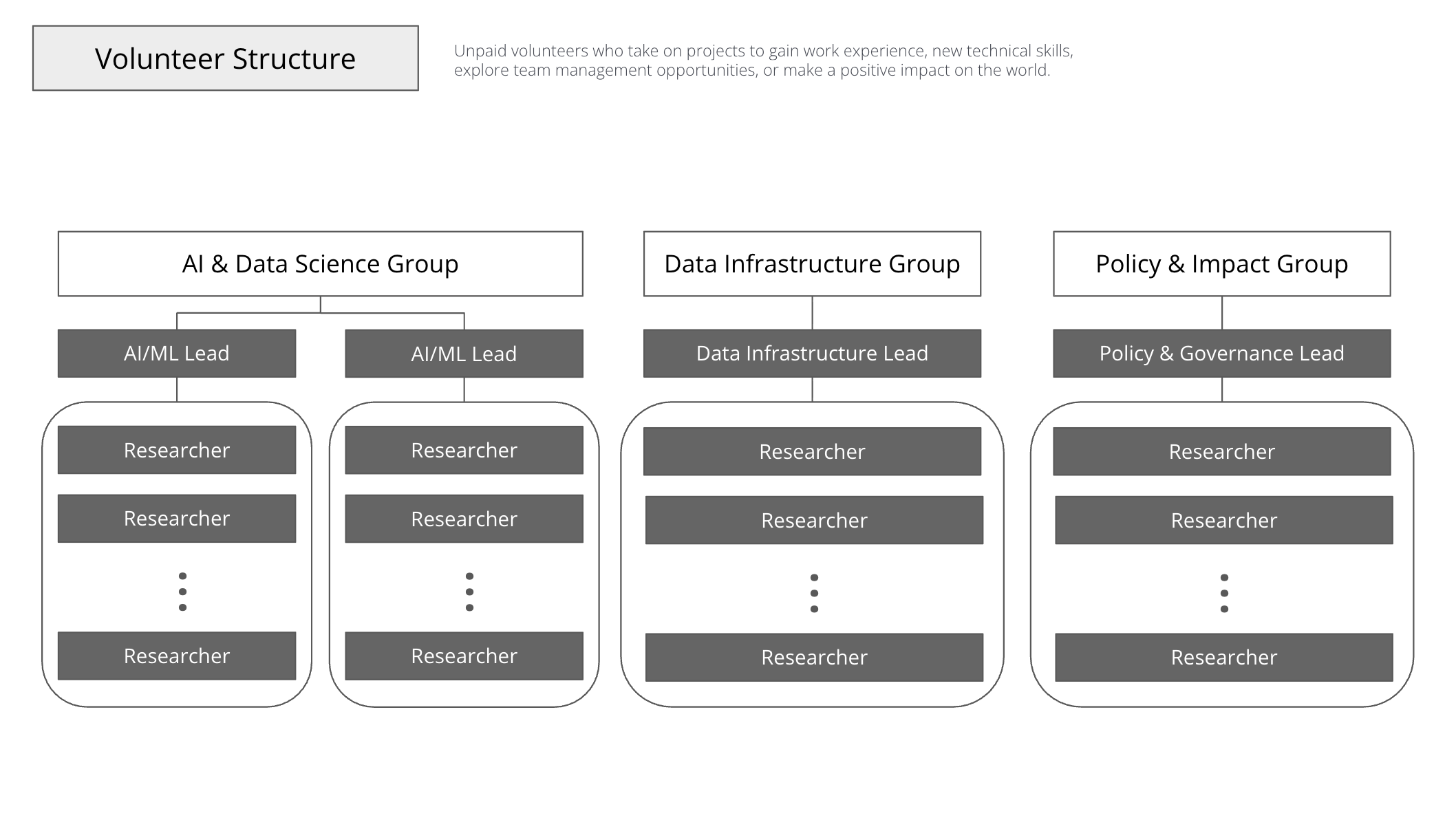

Having volunteers lead projects improves the ability to scale (so I don’t have to micromanage all technical details).

I’ve been keeping very close track of every person and organisation I’ve worked with over the past two years who would have any utility to the AGF, and will be relying on this network for scaling.

Starting the DPhil

In normal circumstances, it would seem like this should be my first and most important update, but in my case I’m somewhat using my DPhil as an excuse to keep my Oxford affiliation (which is literally priceless for getting collaborations with various orgs and academics). I’m mainly working in research areas that I’ve already spent some time on in the past, so I’m cheating a little bit by hitting the ground running in terms of the work I’ve already done.

Rough research plan for the year.

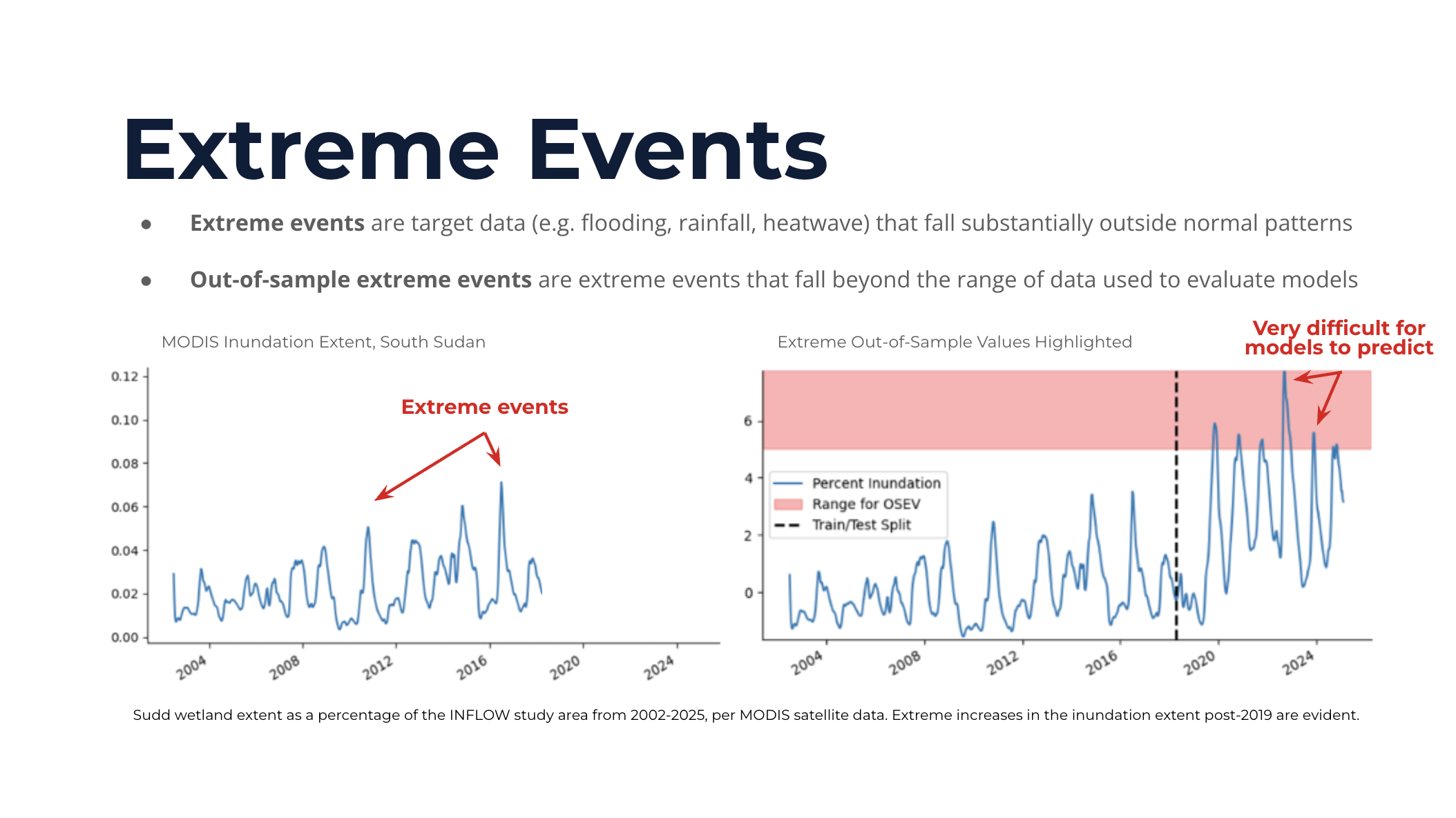

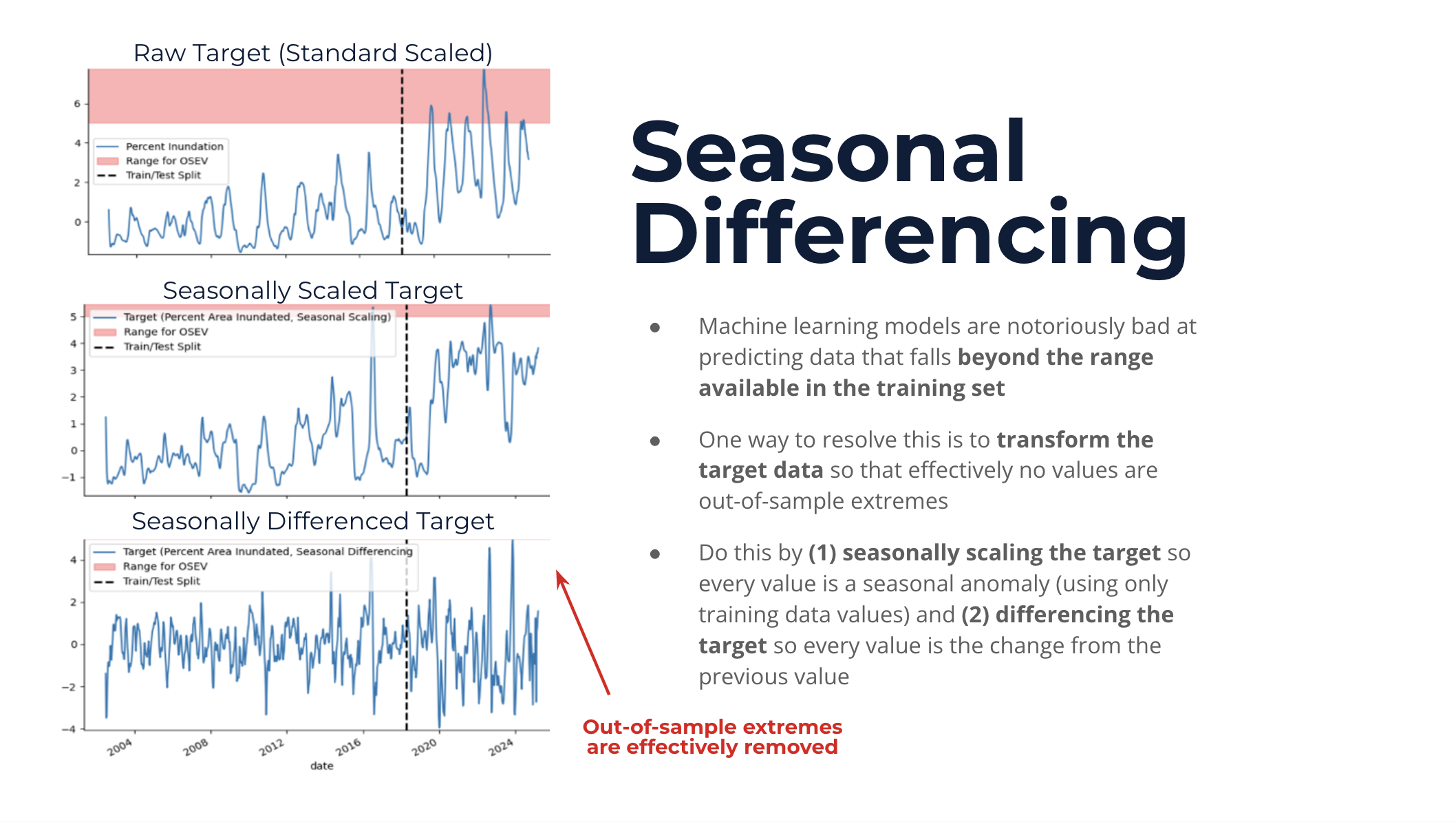

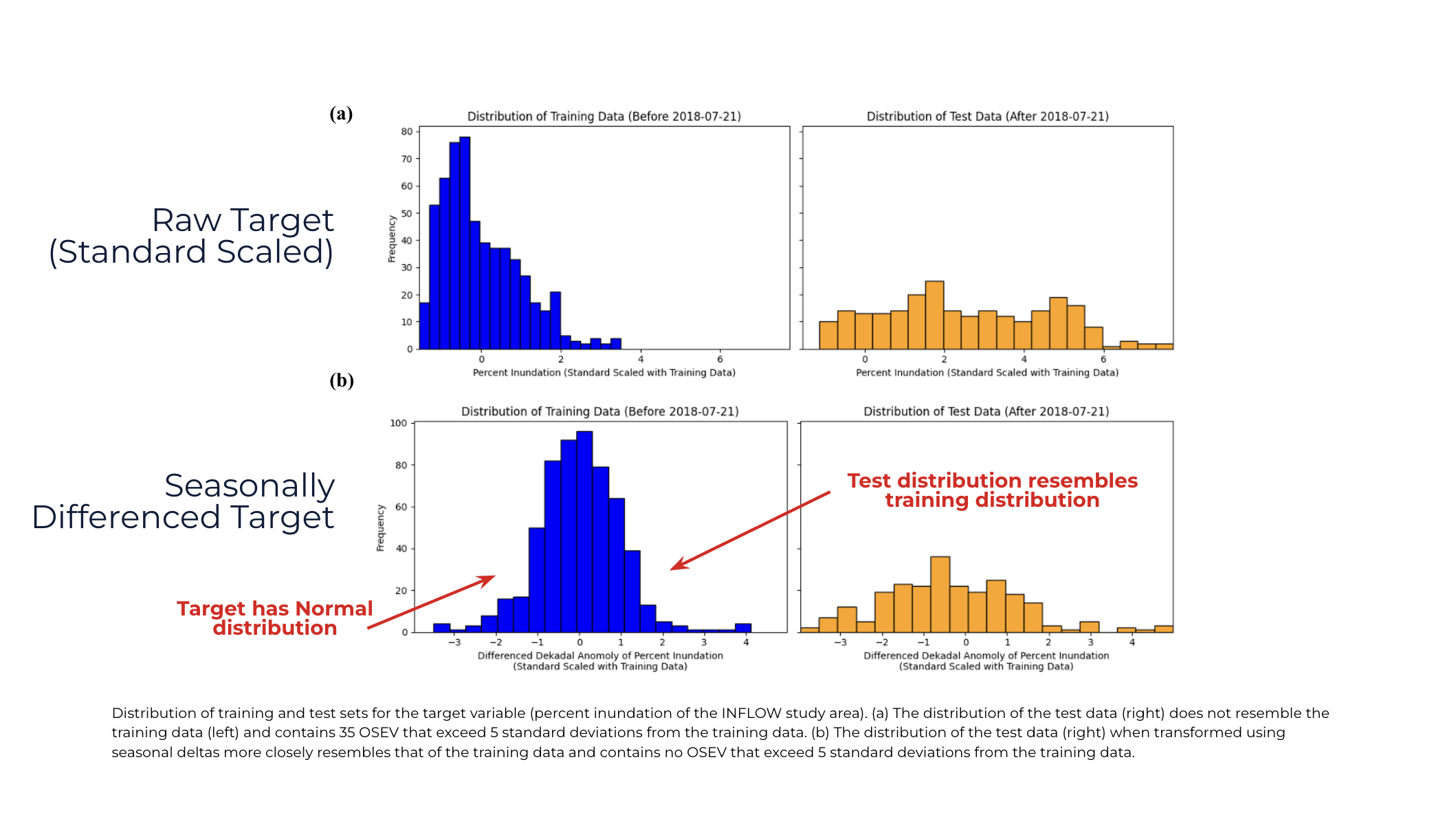

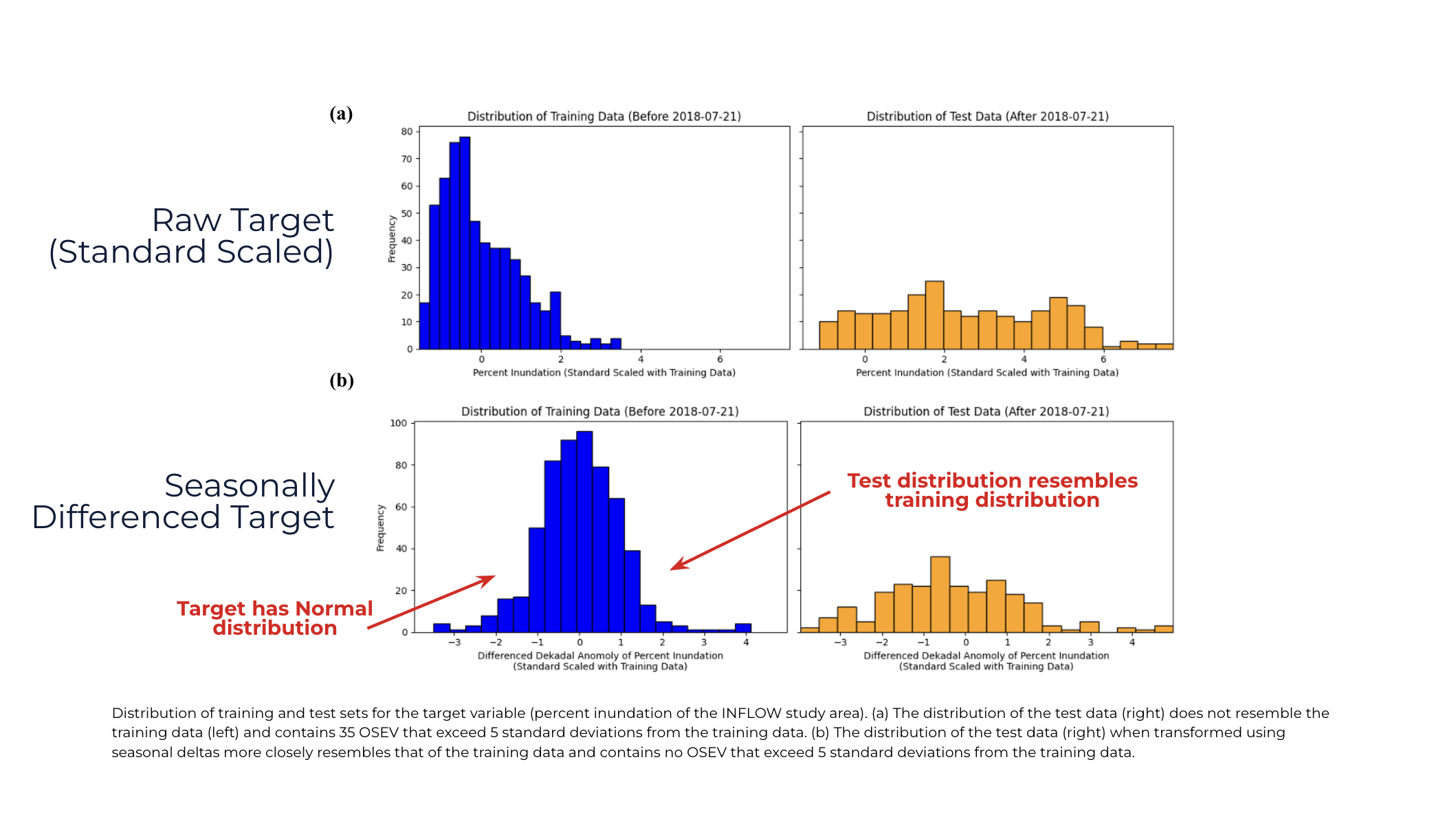

My project for this year will be exploring ways to improve the performance of high-variance machine learning models at predicting out-of-sample extreme values (OSEV). These values come up all the time when the available data does not represent the entire spectrum of possible values, so after training a model the target value may end up being way outside the distribution it was trained on (e.g. for floods, cyclones, wildfires, etc.).

Example of OSEV values for the White Nile inundation extent in South Sudan.

Machine learning models are very good at capturing complex patterns in the training data (i.e. they have low bias and high variance), but as a consequence tend to perform poorly at extrapolating beyond the data they have seen. There are ways to improve this, as I have already shown with my INFLOW-AI model, presented to my supervisor.

Basic time series methods (in this case, first differencing and seasonal averaging) were applied to enable the transformer model to detect OSEV better.

This small change makes the OSEV data resemble the training data way more closely.
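To make the idea concrete, here is a minimal sketch of the two transformations on a synthetic series. It is not the INFLOW-AI code: the function names, the 12-step season, the synthetic trend, and the reading of "seasonal averaging" as subtracting the training-period mean for each position in the seasonal cycle are all my assumptions for illustration.

```python
import numpy as np

def first_difference(series):
    """Step-to-step change x[t+1] - x[t]; removes the level of the series."""
    return np.diff(np.asarray(series, dtype=float))

def seasonal_means(values, period):
    """Mean of the values at each position in the seasonal cycle."""
    values = np.asarray(values, dtype=float)
    return np.array([values[p::period].mean() for p in range(period)])

def remove_seasonal_mean(values, means, start=0):
    """Subtract the seasonal mean that matches each time step.

    `start` is the index of the first value in the full series, so that
    held-out data stays aligned with the seasonal cycle of the training data.
    """
    values = np.asarray(values, dtype=float)
    means = np.asarray(means, dtype=float)
    phase = (start + np.arange(len(values))) % len(means)
    return values - means[phase]

def fraction_outside(train, test):
    """Share of test values that fall outside the range seen in training."""
    train, test = np.asarray(train), np.asarray(test)
    return float(np.mean((test < train.min()) | (test > train.max())))

# Hypothetical data: an upward trend plus a yearly cycle plus a little noise,
# so the later (held-out) values sit above anything seen in training.
rng = np.random.default_rng(0)
period = 12
t = np.arange(120)
series = 0.5 * t + 3 * np.sin(2 * np.pi * t / period) + rng.normal(0, 0.2, t.size)
train, test = series[:96], series[96:]

# Seasonal means are estimated on the training data only, then reused.
train_d, test_d = first_difference(train), first_difference(test)
means = seasonal_means(train_d, period)
train_x = remove_seasonal_mean(train_d, means)
test_x = remove_seasonal_mean(test_d, means, start=len(train))

print("raw values outside training range:        ", fraction_outside(train, test))
print("transformed values outside training range:", fraction_outside(train_x, test_x))
```

The point of the sketch is only that the model would be asked to predict changes relative to a seasonal norm rather than absolute levels. Predictions in the transformed space would still need to be mapped back to levels (adding the seasonal mean and cumulatively summing from the last known value) before they can be used.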

I don’t expect my research to be groundbreaking, but hopefully it will be at least somewhat useful for the broader algorithmic governance issues I’m more interested in. I just think that for now, I’m having more impact doing applied projects than is possible in academia.

Results showing that this simple method is pretty effective for transformers (high variance model).

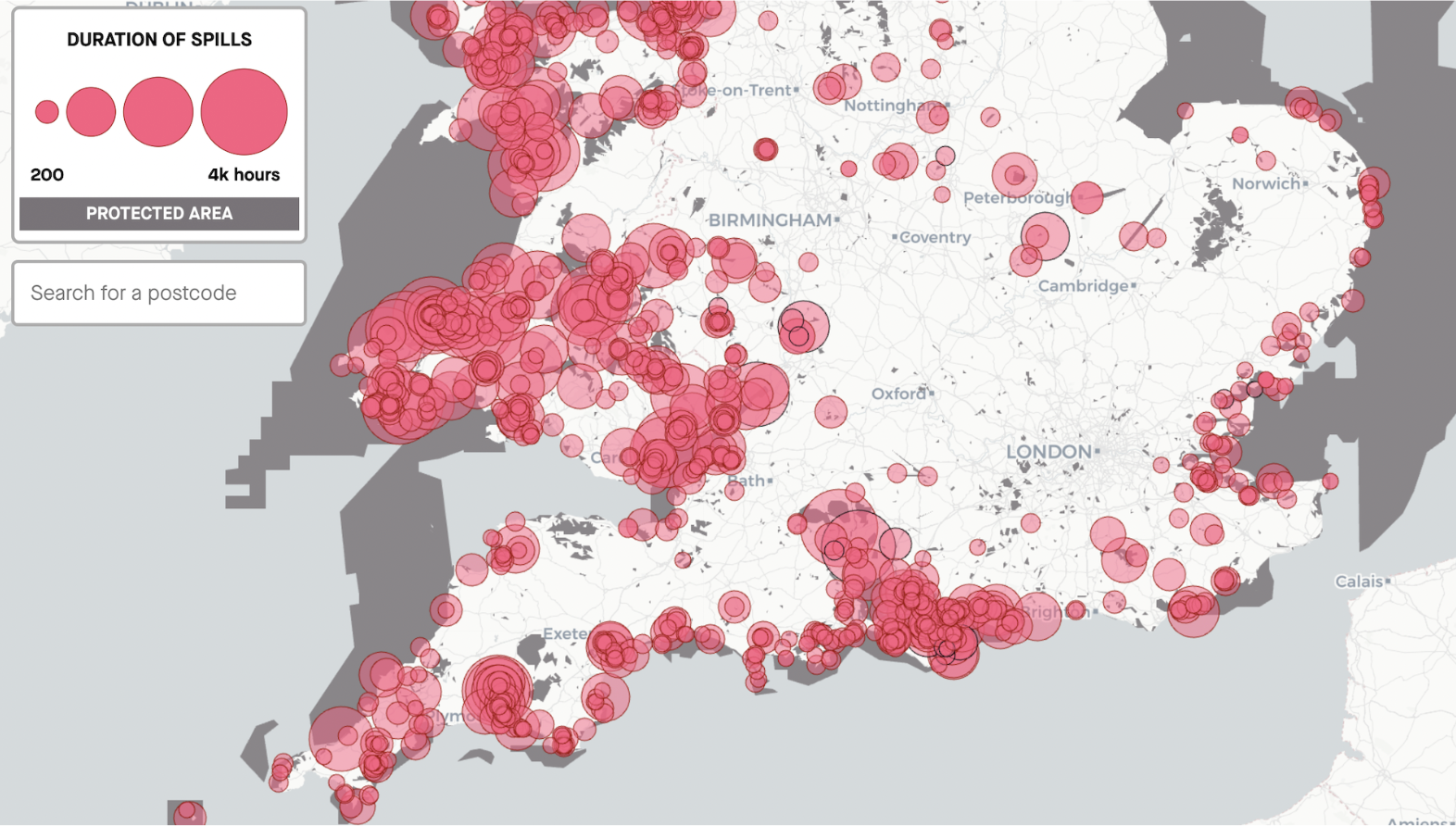

I will also be working with GeoSmart, my external funder, to build them a forecast model and API to better forecast flooding in UK chalk catchments using groundwater data. I’m very grateful to be working on this project, as it is very closely aligned with my interests in using algorithmic methods to improve decision-making for public good (in this case, hopefully improving the ability of water treatment companies, which are private in the UK, to stop dumping tons of raw sewage into the rivers when it rains by enabling better planning for trucks to remove excess sewage before anticipated high flow events).

Map of combined-sewer overflow (CSO) events; the chalk catchments are roughly in the middle, near the South coast.

I’ll likely be working on this project for Michaelmas (first term) only.

Asking Questions to Important People

One of the perks of living in Oxford is that many interesting and important people visit and engage with forums where you can directly ask them questions. This month I got the chance to pester Carl Benedikt Frey on whether his big economic history hypothesis was prematurely drawing conclusions about the apparent slow uptake of AI for automation, and to bother David Tilman about whether we should give up on trying to influence American policymakers to act in the interests of their constituents instead of entrenched corporate lobbyists and just focus on the developing world instead.

I also got to attend a small group lecture hosted by Toby Ord at my office (turns out, he is also my neighbour).

My favourite interaction this month, though, was definitely being able to ask Sarah Friar, the CFO of OpenAI, the following at the Oxford Union:

Given recent revelations about the circular nature of investments in the generative AI market and OpenAI’s dependency on extremely high investments from this circular system, including 100B from NVIDIA to essentially buy GPUs from NVIDIA, what is OpenAI’s plan to continue functioning if there is a market correction (e.g. caused by failing to deliver on promises of imminent AGI or China claiming to develop competitive GPUs) and these investments disappear? Is that why OpenAI is considering incorporating ads in ChatGPT responses? Or is there no plan for this scenario?

Getting called to ask the question was tricky, since the Union president almost always just picks their friends and fellow committee members. I had to really catch his attention, and even then I was only called since Sarah pointed out to the Union president that he was only picking men to ask questions.

Me and some members of the Oxford AI Safety Initiative (OAISI) on our way to ask Sarah Friar some questions at the Union.

Her answer, of course, was extremely corporate and insubstantial. But reading between the lines of what she said, it seems pretty clear that OpenAI is planning to go fully into profit-seeking mode (she even Freudian slipped at one point, referring to OpenAI as a “for-profit” model). They seem to be going fully into ads and other paid service models, especially for programmers, who they seem to think would be most willing to pay high prices to access their models.

The shift to an ad-based model is also clear from OpenAI’s organisation structure, as their CEO of Applications is Fidji Simo, who was responsible for introducing ads into Facebook.

It remains to be seen whether this arrangement will be sustainable.

Other Things I’ve Done

I had a quick G7-related presentation at the Canadian High Commission in London (sat on a panel while relevant diplomats asked questions about how they can improve the impact of the G7 Summit held last summer in Kananaskis). At this point, I’ve visited the High Commission so many times that I’m starting to become familiar with the people there, which might be useful in the future.

Back at the High Commission.

I’ve also joined the Oxford AI Safety Initiative (OAISI) Research Roundtable. I am personally quite skeptical of the utility of technical AI safety research, but since I’m working adjacent to this field I think it’s important for me to understand what they are working on.

I mainly just fail to see the theory of change for how AI safety research can meaningfully achieve its goals (i.e. prevent rogue AI). AI governance is at minimum necessary for this, as any actually useful results from AI safety research would need to be mandated to be applied to LLMs produced by major corporations… I also think most of the danger cases clearly come from deliberate misuse (e.g. I hack OpenAI and prompt ChatGPT to inject harmful code into people’s requested scripts), instead of self-motivated LLMs.

I also think AI safety research focusses way too much on LLMs when there are AI-influenced decisions being made using entirely different models (e.g. major resource allocation via Google Flood Hub, Google GraphCast, and various health insurance/crime models that have major problems or are not open source).

I’m also volunteering a bit with Your Party to help reduce the probability that Reform will win the next election. More on that next month, hopefully.

A Bit of Life on the Side

Hosted a Halloween party at my house (we all dressed up like Alec) and attended a Mansfield formal with some old (and new) friends.

We’re all back for the year.
